Learn what split testing is and how to do it, along with the elements you can test and tips to evaluate your experiment’s performance.

As PPC marketers, we constantly hear about the value of split testing – an essential practice for identifying what performs best and for driving long-term improvements in our accounts.

It sounds straightforward: test, analyze and apply what works.

Yet, while the benefits of split testing are widely discussed, guidance on how to conduct it effectively is often missing.

This article aims to bridge that gap by walking you through efficient and effective split testing methods in PPC so you can maximize results and ROI.

What is split testing?

Split testing, or A/B testing, is a method of comparing two variations of an element, such as ad copy or call to action (CTA), to determine which performs better.

For example, you might test a “Book a demo” CTA against a “Schedule a call” CTA to see which drives more clicks.
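The comparison itself is simple arithmetic. Below is a minimal Python sketch that compares the CTR of the two CTAs. The impression and click counts are invented for illustration, and `Variant` and `relative_lift` are hypothetical helpers, not part of any ad platform's API.

```python
from dataclasses import dataclass


@dataclass
class Variant:
    """One arm of a split test: the CTA text plus its delivery stats."""
    cta: str
    impressions: int
    clicks: int

    @property
    def ctr(self) -> float:
        # Click-through rate = clicks / impressions (0 if no impressions yet)
        return self.clicks / self.impressions if self.impressions else 0.0


def relative_lift(control: Variant, variant: Variant) -> float:
    """Relative change in CTR of the variant over the control (0.25 = +25%)."""
    if control.ctr == 0:
        raise ValueError("control CTR is zero; lift is undefined")
    return (variant.ctr - control.ctr) / control.ctr


# Hypothetical numbers, for illustration only
control = Variant("Book a demo", impressions=10_000, clicks=400)
variant = Variant("Schedule a call", impressions=10_000, clicks=500)
print(f"{control.cta}: {control.ctr:.2%}")               # Book a demo: 4.00%
print(f"{variant.cta}: {variant.ctr:.2%}")               # Schedule a call: 5.00%
print(f"Lift: {relative_lift(control, variant):+.0%}")   # Lift: +25%
```

A raw difference like this doesn't tell you whether the gap is real or just noise, so treat it as a starting point rather than a verdict.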

Another related technique, multivariate testing, involves testing multiple variables at once, such as landing page layout, ad copy and CTA. This can yield deeper insights but requires more data and planning, because every combination of variables needs its own share of traffic.
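To see why testing several variables at once needs more data, count the variants. This Python sketch assumes a hypothetical test with two options for each of three elements. A full-factorial test serves every combination, so a fixed pool of clicks is divided much more thinly than in a two-way split test. The element names and click total are made up.

```python
from itertools import product

# Hypothetical elements, each with two options to test
elements = {
    "landing_page_layout": ["single column", "two column"],
    "ad_copy": ["first person", "third person"],
    "cta": ["Book a demo", "Schedule a call"],
}

# Full-factorial test: one variant per combination of options
combinations = list(product(*elements.values()))
print(len(combinations))  # 8 variants (2 x 2 x 2)

# Hypothetical traffic budget shared across all variants
total_clicks = 8_000
clicks_per_mvt_variant = total_clicks // len(combinations)
clicks_per_split_variant = total_clicks // 2  # simple A/B: two variants

print(clicks_per_mvt_variant)    # 1000
print(clicks_per_split_variant)  # 4000
```

Add a fourth two-option element and the number of variants doubles again to 16, halving each variant's traffic once more.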

This article focuses on traditional split testing: testing one element against another.

Once you’re comfortable with this, you can move on to more complex multivariate tests.

Dig deeper: How to develop PPC testing strategies

Why is split testing important?

While you may feel confident about what works in your account, relying on data-driven insights from split testing can reveal unexpected opportunities for improvement.

Split testing provides concrete evidence of what resonates with your audience rather than relying on assumptions or preferences.

Additionally, it allows you to test new strategies on a small scale before committing to larger changes.

What to split test

The first step in split testing is deciding what you want to test, ideally with a clear hypothesis that outlines why you’re testing it and what results you expect to see.

While not essential, having a hypothesis helps clarify your goals and makes it easier to evaluate the experiment’s outcome later on.

For example, suppose you want to test ad copy written in the first person rather than the third person.

You might hypothesize that first-person language will create a sense of warmth and familiarity, making the ad more personable and potentially increasing the click-through rate (CTR).
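One lightweight way to make a hypothesis concrete is to write it down as structured data before launching the test. The Python sketch below uses a hypothetical format (the `Hypothesis` class and its fields are illustrative, not a platform feature) to capture the first-person example, and checks only whether the metric moved in the predicted direction.

```python
from dataclasses import dataclass
from typing import Literal


@dataclass(frozen=True)
class Hypothesis:
    """Hypothetical record of a split-test hypothesis: what changes, why, what should move."""
    element: str
    control: str
    variant: str
    rationale: str
    metric: str
    expected_direction: Literal["increase", "decrease"]

    def direction_matches(self, control_value: float, variant_value: float) -> bool:
        """True if the metric moved the way the hypothesis predicted.

        Checks direction only; it says nothing about statistical significance.
        """
        if self.expected_direction == "increase":
            return variant_value > control_value
        return variant_value < control_value


first_person_copy = Hypothesis(
    element="ad copy voice",
    control="third person",
    variant="first person",
    rationale="First-person language feels warmer and more personable",
    metric="CTR",
    expected_direction="increase",
)

# Hypothetical observed CTRs after the test
print(first_person_copy.direction_matches(control_value=0.040, variant_value=0.046))  # True
```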

To decide what to test, consider the metrics you want to improve or your specific challenges. For instance:

- If you’re seeing high form abandonment rates, you could test a landing page with fewer form fields.

- If new competitors have entered your auction insights, you might test ad copy that emphasizes unique selling points (USPs) to stand out more effectively in search results.

You can also experiment with creative choices, such as testing different color themes on landing pages, to see if a particular aesthetic performs better.

By strategically selecting elements to test, you can focus your efforts on the areas with the greatest potential impact on your campaign results.

How to split test in PPC

Thankfully, most of the major platforms, including Google Ads, Microsoft Advertising and LinkedIn Ads, offer some form of experimentation tool, making split testing much easier.

Let’s examine how you would split test two different landing pages for your Google Ads search campaign.

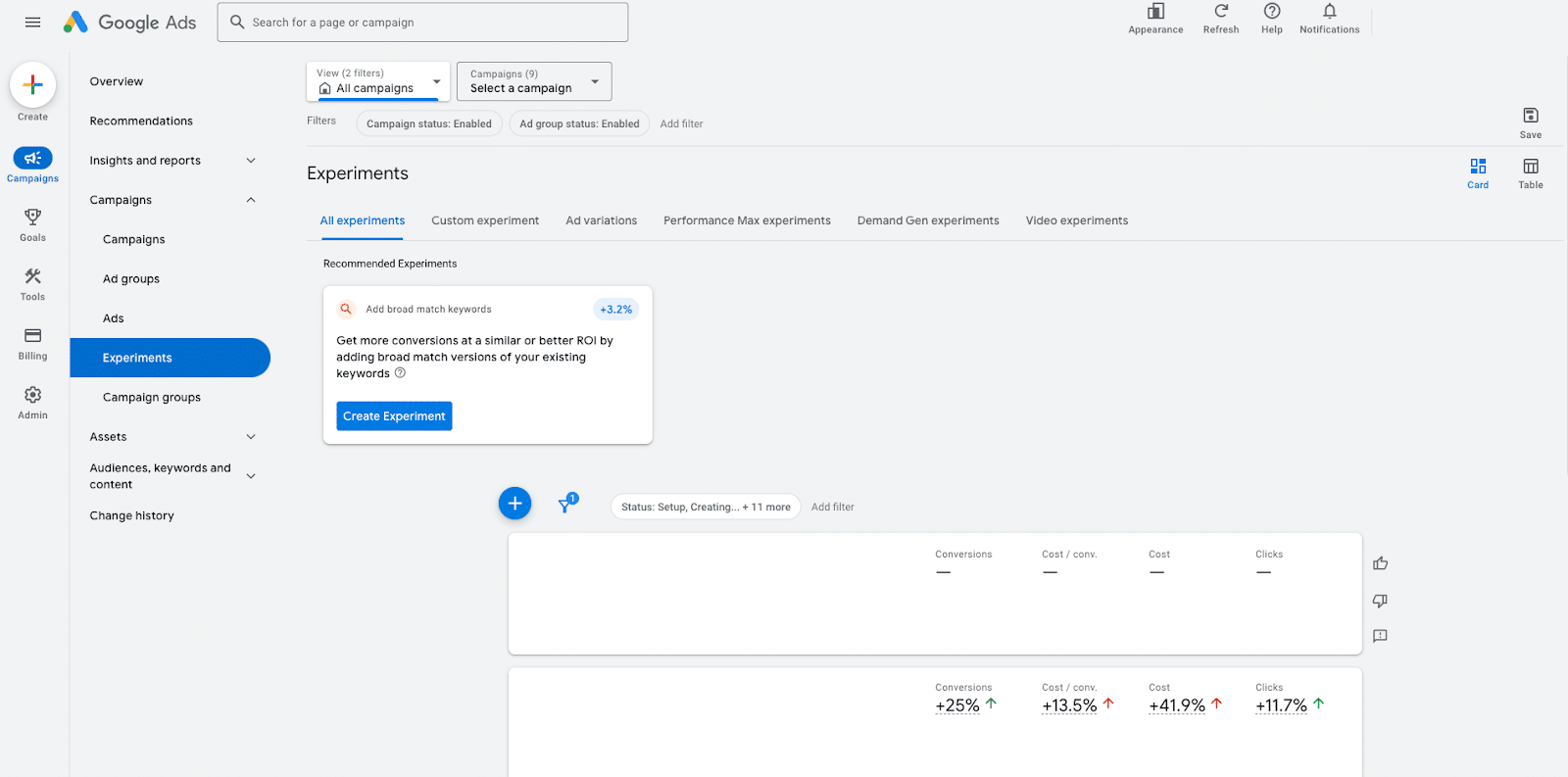

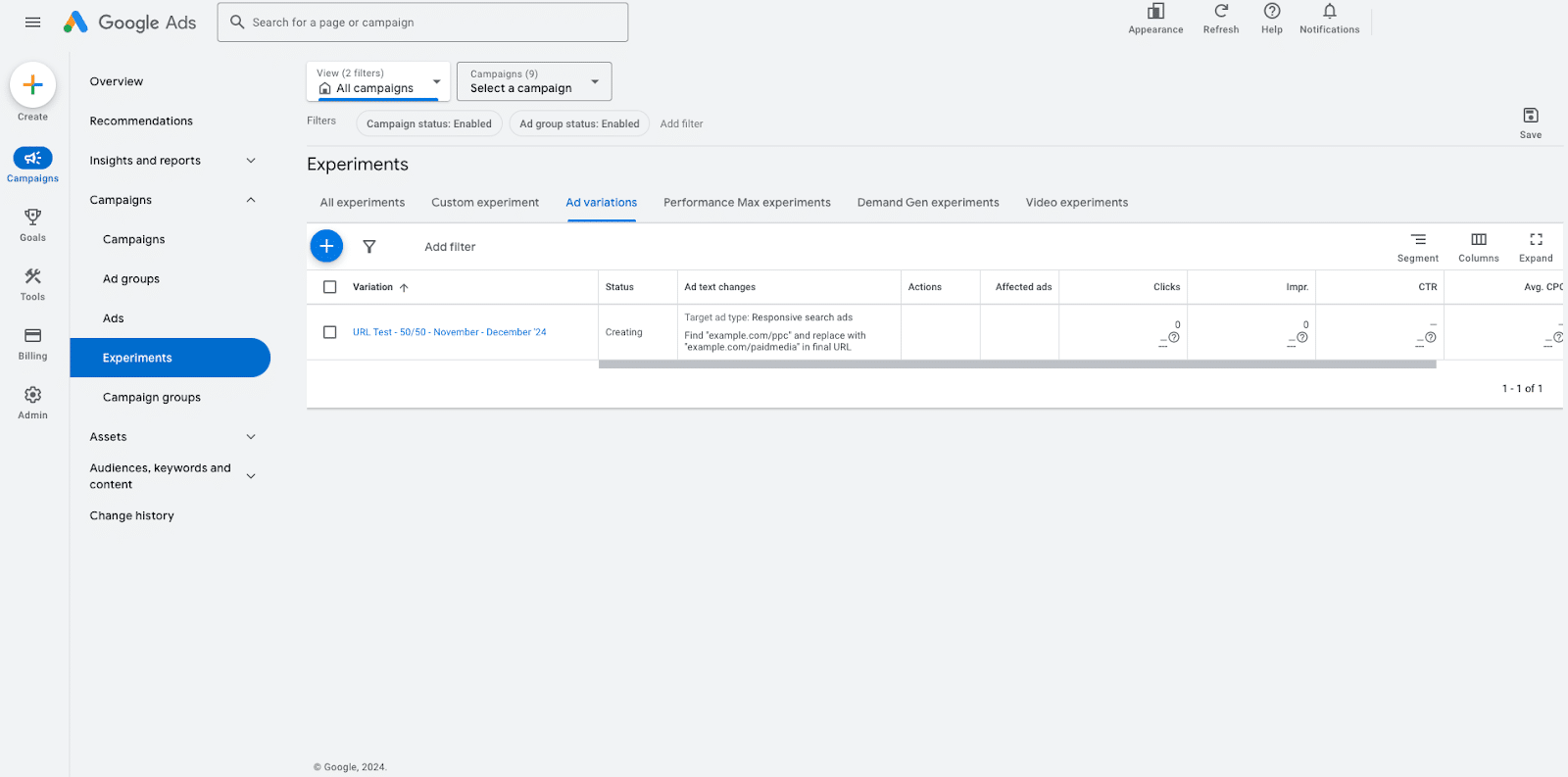

Step 1: Open Experiments, now found under Campaigns in the left-hand navigation menu.

While you’re there, check whether any experiments have been run in the account before and what they showed.

Also, make sure no old experiments are still running in the background unnoticed.

Step 2: For this experiment, select Ad variations and click ‘+’.

You’ll also see other experiment types, such as Performance Max and Video experiments, if you want to test those, too.

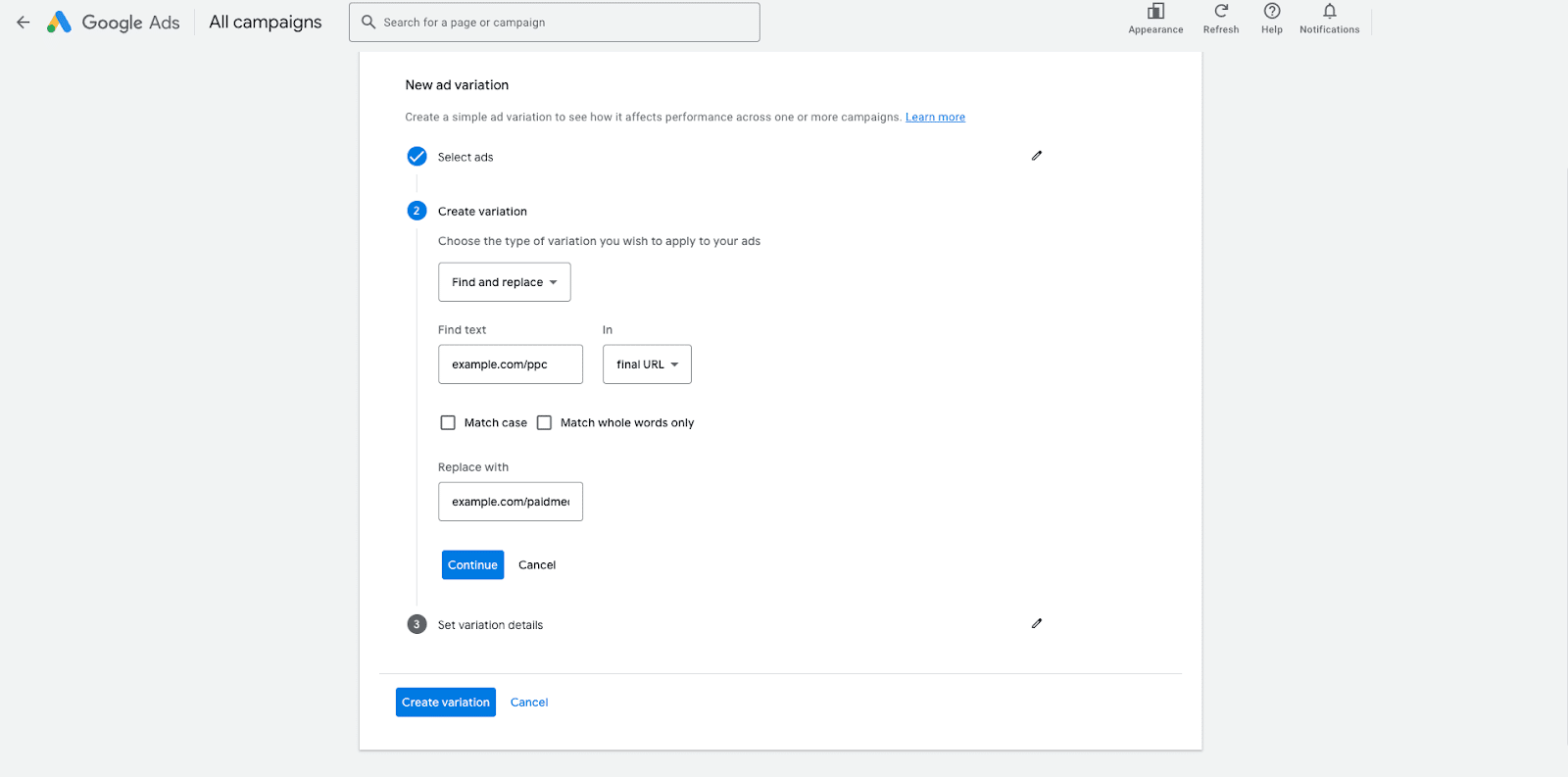

Step 3: Define the specific campaigns you want to include in your experiment.

You can choose to run the test on particular campaigns or apply it to all campaigns in your account.

Additionally, you can filter the experiment to target only certain ads or leave this filter blank to test all enabled ads within the selected campaigns.

Next, specify the exact aspect of the ad you want to test and the specific change you plan to make.

For this example, I want to find any final URL of www.example.com/ppc and replace it with www.example.com/paidsearch.
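Conceptually, the find-and-replace creates a trial version of each in-scope ad with the matching URL swapped, while the originals keep running unchanged. The Python sketch below simulates that on a hypothetical in-memory list of ads. It is not the Google Ads API, and it assumes an exact match on the whole final URL, which may differ from how the platform matches text. The `campaigns` parameter stands in for the campaign filter, with `None` meaning all campaigns.

```python
from dataclasses import dataclass, replace
from typing import Iterable, List, Optional, Set


@dataclass(frozen=True)
class Ad:
    campaign: str
    ad_id: int
    final_url: str


def find_and_replace_final_url(
    ads: Iterable[Ad],
    find: str,
    replace_with: str,
    campaigns: Optional[Set[str]] = None,
) -> List[Ad]:
    """Build trial versions of the ads with matching final URLs swapped.

    campaigns=None mimics leaving the campaign filter blank (all campaigns).
    Assumes an exact, whole-URL match.
    """
    trial = []
    for ad in ads:
        in_scope = campaigns is None or ad.campaign in campaigns
        if in_scope and ad.final_url == find:
            trial.append(replace(ad, final_url=replace_with))  # copy with new URL
        else:
            trial.append(ad)  # out of scope or no match: unchanged
    return trial


ads = [
    Ad("Brand", 1, "www.example.com/ppc"),
    Ad("Brand", 2, "www.example.com/pricing"),
    Ad("Generic", 3, "www.example.com/ppc"),
]
trial = find_and_replace_final_url(ads, "www.example.com/ppc", "www.example.com/paidsearch")
for ad in trial:
    print(ad.ad_id, ad.final_url)
```

Because `Ad` is frozen and `replace` returns a copy, the original ads are never modified, mirroring how an experiment leaves the base campaign intact.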

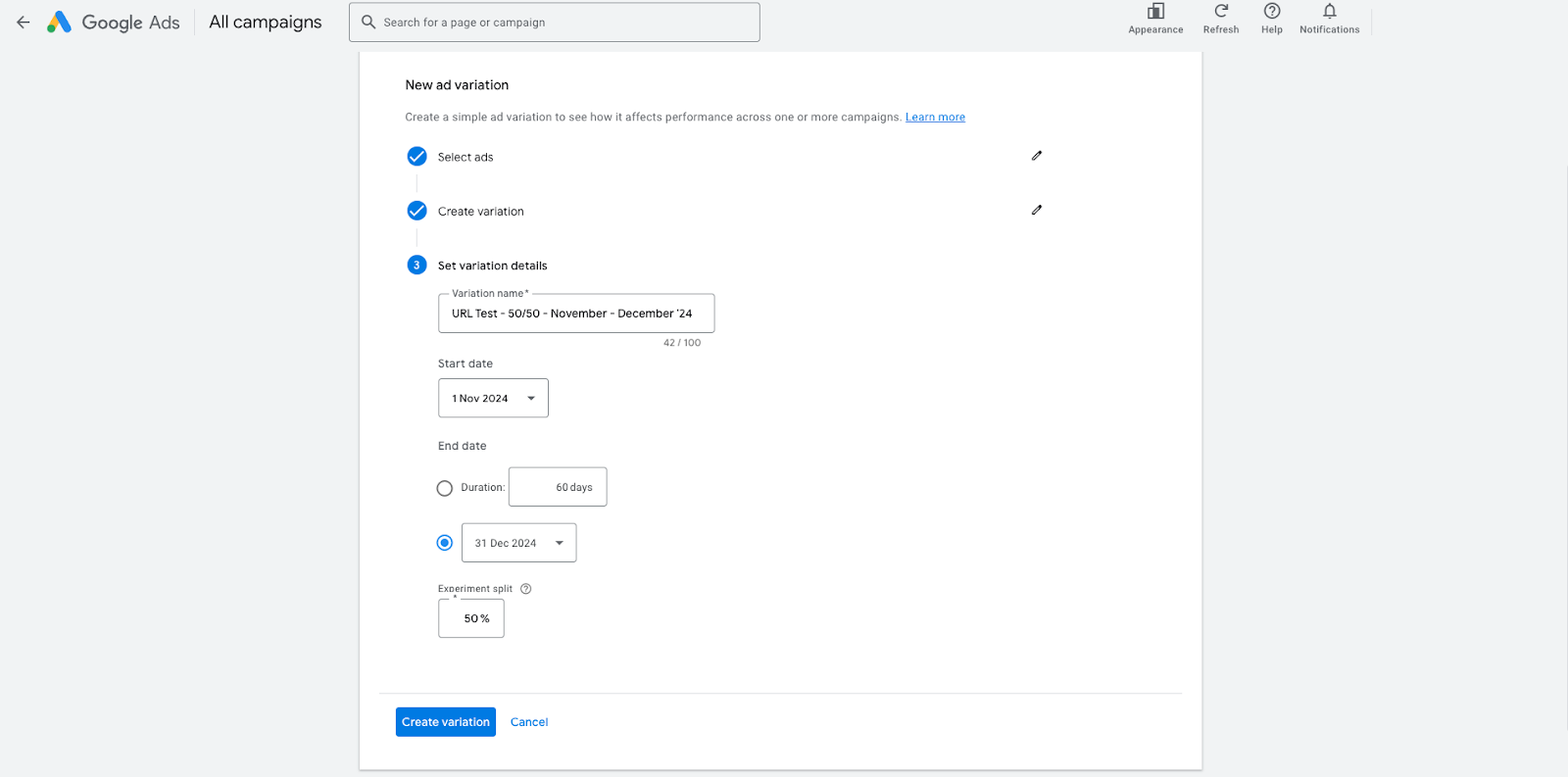

Step 4: Set the variation details, where you decide how to split traffic and how long the experiment will run.

For this test, I’m setting it to run for all of November and December, with a 50/50 traffic split between www.example.com/ppc and www.example.com/paidsearch.
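The platform handles the traffic split for you, but it helps to know what a consistent 50/50 split means: each user should stay in the same arm for the whole test. A common technique is to hash a stable identifier into a bucket. The Python sketch below illustrates that idea with a hypothetical `assign_arm` function; it is not how Google Ads or any other platform documents its own split.

```python
import hashlib
from collections import Counter


def assign_arm(user_id: str, experiment_name: str, variant_share: float = 0.5) -> str:
    """Deterministically assign a user to 'control' or 'variant'.

    Hashing experiment name + user ID means a user always gets the same arm
    within one experiment, while different experiments split independently.
    """
    digest = hashlib.sha256(f"{experiment_name}:{user_id}".encode("utf-8")).hexdigest()
    bucket = int(digest[:8], 16) / 2**32  # first 32 bits mapped to [0, 1)
    return "variant" if bucket < variant_share else "control"


# Simulate 10,000 hypothetical users in a 50/50 test
experiment = "LP test: /ppc vs /paidsearch"
counts = Counter(assign_arm(f"user-{i}", experiment) for i in range(10_000))
print(counts)  # close to 5,000 each, not exactly
```

Changing `variant_share` to 0.2 would send roughly 20% of users to the variant, which is one way to limit risk when testing a bolder change.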

Now, my experiment is all set up and ready to go!

The Experiments sections in other platforms, such as Microsoft Advertising and LinkedIn Ads, look slightly different, but the steps are broadly the same.

Split testing tips

Here are some tips for split testing success:

- Give your experiment a name that indicates what you’re testing. Down the line, it will be much easier to identify what an experiment was from its name alone.

- Monitor performance during the experiment period; don’t just set it and forget it. If you see results that are significantly hurting the account, consider pausing the experiment while you investigate what’s happening.

- Be patient, and make sure the experiment gathers enough traffic to support a decision based on performance data. If a campaign has low traffic volume, you may need to run the experiment longer than you’d expect to generate enough data.
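How much is “enough traffic”? A standard power calculation for comparing two rates gives a rough answer. The Python sketch below assumes a two-sided two-proportion z-test at 5% significance and 80% power, a hypothetical 4% baseline CTR and a 10% relative lift you want to be able to detect; the daily-traffic figures are also invented. For a landing page test, you would plug in conversion rate and count clicks instead of impressions.

```python
from math import ceil, sqrt
from statistics import NormalDist


def sample_size_per_arm(baseline_rate: float, relative_lift: float,
                        alpha: float = 0.05, power: float = 0.8) -> int:
    """Approximate units (e.g. impressions for CTR) needed in EACH arm to detect
    the given relative lift with a two-sided two-proportion z-test."""
    p1 = baseline_rate
    p2 = baseline_rate * (1 + relative_lift)  # rate the variant would need to hit
    p_bar = (p1 + p2) / 2
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)  # ~1.96 for alpha = 0.05
    z_beta = NormalDist().inv_cdf(power)           # ~0.84 for 80% power
    numerator = (z_alpha * sqrt(2 * p_bar * (1 - p_bar))
                 + z_beta * sqrt(p1 * (1 - p1) + p2 * (1 - p2))) ** 2
    return ceil(numerator / (p2 - p1) ** 2)


def days_needed(per_arm: int, daily_units: int, variant_share: float = 0.5) -> int:
    """Days until the smaller arm reaches per_arm, given total daily traffic."""
    smaller_arm_daily = daily_units * min(variant_share, 1 - variant_share)
    return ceil(per_arm / smaller_arm_daily)


per_arm = sample_size_per_arm(baseline_rate=0.04, relative_lift=0.10)
print(per_arm)                                   # impressions needed per arm
print(days_needed(per_arm, daily_units=2_000))   # higher-traffic campaign
print(days_needed(per_arm, daily_units=500))     # low-traffic campaign
```

Under these assumptions, each arm needs a little under 40,000 impressions: about 40 days at 2,000 impressions a day split 50/50, and roughly four times as long at 500 a day. Smaller expected lifts push the numbers up sharply, because the required sample grows with the inverse square of the difference you want to detect.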

Dig deeper: A/B testing mistakes PPC marketers make and how to fix them

How to evaluate performance

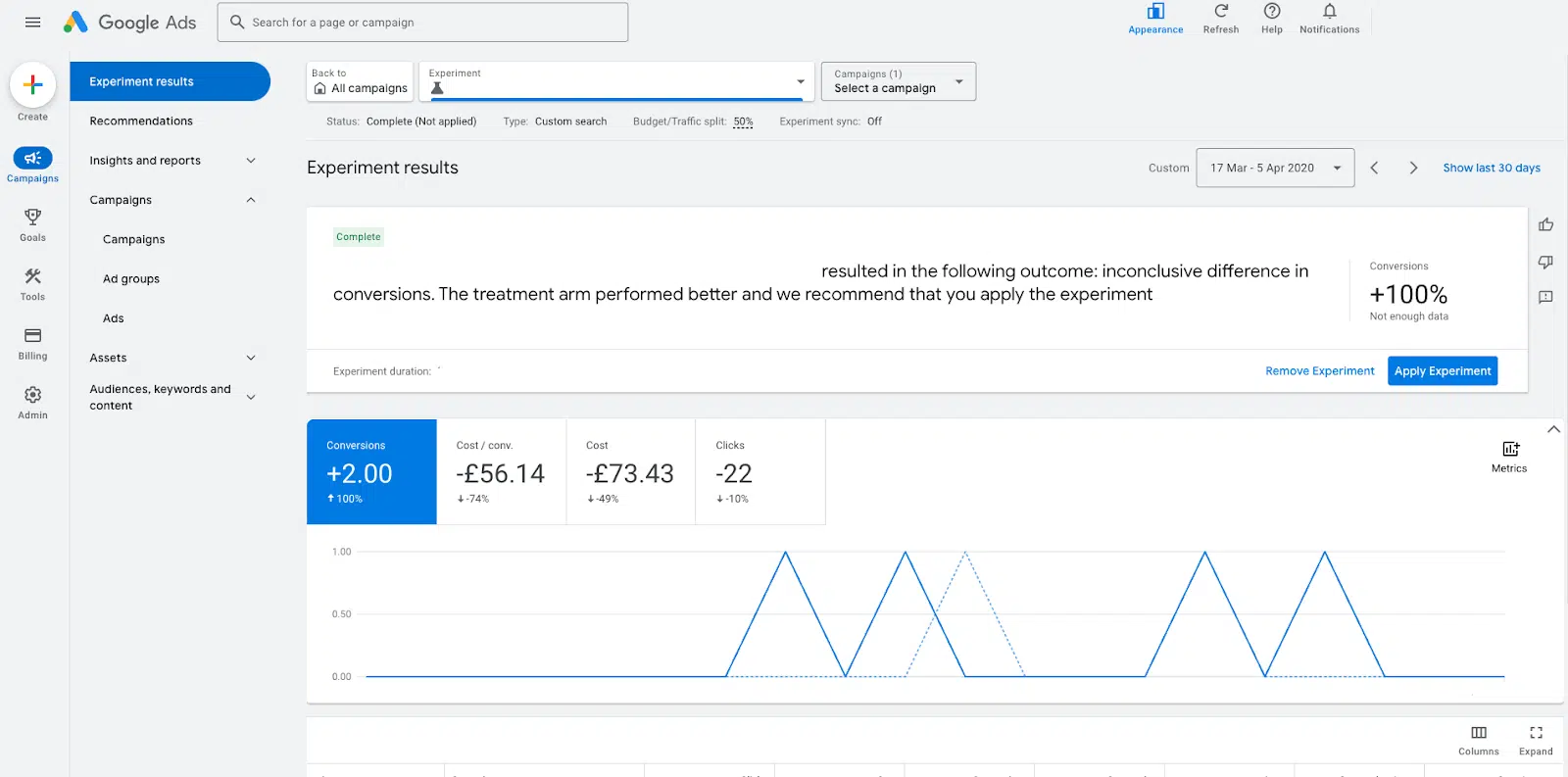

Once your experiment period has ended (or if you choose to end it early), you need to decide whether to apply the changes you tested or keep your campaign as it is.

Most platforms provide experiment results to help you make this decision. These typically show whether the variant outperformed the original and how its performance differed on each metric.
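Platforms typically run a significance test behind the scenes, and their exact methods vary. As a generic illustration only, the Python sketch below uses a two-proportion z-test on CTR (one common choice, assumed here) to classify a result as better, worse or inconclusive. `evaluate_split_test` and the counts are hypothetical.

```python
from math import sqrt
from statistics import NormalDist


def evaluate_split_test(control_clicks: int, control_impressions: int,
                        variant_clicks: int, variant_impressions: int,
                        alpha: float = 0.05):
    """Two-sided two-proportion z-test on CTR.

    Returns ('variant better' | 'variant worse' | 'inconclusive', p_value).
    """
    p1 = control_clicks / control_impressions
    p2 = variant_clicks / variant_impressions
    # Pooled CTR under the null hypothesis that both arms perform the same
    pooled = (control_clicks + variant_clicks) / (control_impressions + variant_impressions)
    se = sqrt(pooled * (1 - pooled) * (1 / control_impressions + 1 / variant_impressions))
    if se == 0:
        return "inconclusive", 1.0  # no variation at all (0% or 100% CTR)
    z = (p2 - p1) / se
    p_value = 2 * (1 - NormalDist().cdf(abs(z)))
    if p_value >= alpha:
        return "inconclusive", p_value
    return ("variant better" if z > 0 else "variant worse"), p_value


small_verdict, _ = evaluate_split_test(40, 1_000, 50, 1_000)
large_verdict, _ = evaluate_split_test(400, 10_000, 500, 10_000)
print(small_verdict)  # inconclusive
print(large_verdict)  # variant better
```

The same 4% vs. 5% CTR is inconclusive on 1,000 impressions per arm but clearly favors the variant on 10,000, which is why an “inconclusive” verdict often just means the test needs more time or more traffic.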

However, the final deciding factor is whether or not you are happy with the results.

If you aren’t satisfied with the results or still feel that your variable needs tweaking, you can return to the planning stage and try again.

You may also see that the platform states that not enough data was generated to make a conclusive decision.

In instances like this, consider running the experiment again for a longer period or removing any restrictions you put in place, such as applying it only to certain ads within a campaign.

Maximizing PPC performance with split testing

Split testing in PPC is a fantastic way to improve the performance of your campaigns and test out new ideas or hypotheses.

With more platforms adding an Experiments section, it’s easier than ever to start testing your theories.