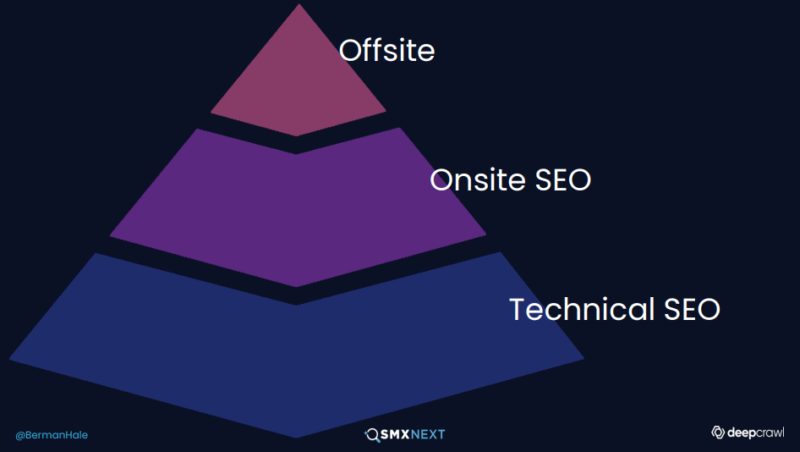

Technical SEO is vital to keep your website healthy. At SMX Next, Ashley Berman Hale shows how marketers can review their site’s crawl stats, rendering and indexing.

“Technical SEO needs to be aware of and support all functions of the business that have anything to do with the website,” said Ashley Berman Hale, VP, professional services at Deepcrawl, during her session at SMX Next. “We are nothing if we don’t have the support, the buy-in and the understanding of the challenges of our colleagues and what they need.”

This need to be aware of other digital business functions highlights technical SEO’s importance in organizations. Without solid technical practices in place, other parts of the website will experience issues, which could affect your bottom line.

“You have to have a return on your investment,” she said. “It has to be sustainable — you have to be able to grow in a way that allows you to not just manage tech debt, but to innovate and become best in class. That’s what you need to succeed in organic search.”

Taking control of your website means pinpointing the most pressing technical issues. Here are three ways Hale recommends marketers check their site’s SEO health.

Analyze website crawling

“Crawling is driven by links, it’s how the Internet works,” Hale said. “It’s one of the most powerful assets you have when working on your site. Your links are a way for you to determine what pages are the most important content, and not all of your votes are created equal.”

“There is a way for you to heavily optimize and influence what Google sees as the most important pages of your site and where it [Google] should be driving that traffic,” she added.

Hale recommends performing a technical link audit of your website to determine how much priority it’s giving to specific links. Rather than reviewing links coming to your site (backlinks), this analysis shows you where your links are headed, what anchor text is used and more.
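As a rough illustration of what such an audit collects, the Python sketch below pulls each link's destination and anchor text out of a page's HTML and counts how many same-host links point at each URL. It is not Hale's method or any particular tool's output. The names `LinkCollector`, `internal_links` and `count_inlinks` are hypothetical, and it assumes you already have each page's HTML in hand, for example from a crawler export.

```python
from collections import Counter
from html.parser import HTMLParser
from urllib.parse import urljoin, urlparse


class LinkCollector(HTMLParser):
    """Collects (absolute_url, anchor_text) pairs from <a href> elements."""

    def __init__(self, base_url):
        super().__init__()
        self.base_url = base_url
        self.links = []
        self._href = None   # href of the <a> currently open, if any
        self._text = []     # text fragments seen inside that <a>

    def handle_starttag(self, tag, attrs):
        if tag == "a":
            href = dict(attrs).get("href")
            if href:
                # Resolve relative links against the page URL.
                self._href = urljoin(self.base_url, href)
                self._text = []

    def handle_data(self, data):
        if self._href is not None:
            self._text.append(data)

    def handle_endtag(self, tag):
        if tag == "a" and self._href is not None:
            # Collapse runs of whitespace in the anchor text.
            text = " ".join("".join(self._text).split())
            self.links.append((self._href, text))
            self._href = None


def internal_links(html, page_url):
    """Return (url, anchor_text) pairs that stay on the same host as page_url."""
    parser = LinkCollector(page_url)
    parser.feed(html)
    host = urlparse(page_url).netloc
    return [(url, text) for url, text in parser.links
            if urlparse(url).netloc == host]


def count_inlinks(pages):
    """pages maps page_url -> html. Returns a Counter of target URL -> link count."""
    counts = Counter()
    for page_url, html in pages.items():
        for url, _ in internal_links(html, page_url):
            counts[url] += 1
    return counts
```

Sorting the resulting counts shows which pages your own site links to most often, which is one approximation of the "votes" Hale describes. A fuller audit would also weigh link position, nofollow attributes and redirects, none of which this sketch handles.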

Once you know how your site links are organized, it’s a good idea to review log files and crawl stats. These show how Google and other search engines interpret these signals.

“It’s great to see where Google is spending time,” she said. “Look in GSC [Google Search Console] — the crawl stats area and the coverage report — then test individual URLs.”
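For the log file side of that review, a minimal Python sketch like the one below can tally which URLs Googlebot requested and what status codes it received. It assumes your server writes the common "combined" access log format and identifies Googlebot only by its user-agent string, which can be spoofed; Google documents a reverse DNS check for confirming genuine Googlebot traffic. The names `LOG_LINE` and `googlebot_hits` are hypothetical.

```python
import re
from collections import Counter

# Matches the request, status, size, referrer and user-agent fields of a
# combined-format access log line. Assumes that format; adjust for your server.
LOG_LINE = re.compile(
    r'"(?P<method>[A-Z]+) (?P<path>\S+) [^"]*" '
    r'(?P<status>\d{3}) \S+ "[^"]*" "(?P<agent>[^"]*)"'
)


def googlebot_hits(lines):
    """Count requests per (path, status) where the user agent mentions Googlebot."""
    hits = Counter()
    for line in lines:
        match = LOG_LINE.search(line)
        # User-agent matching only; this does not verify the requesting IP.
        if match and "Googlebot" in match.group("agent"):
            hits[(match.group("path"), match.group("status"))] += 1
    return hits
```

Passing an open log file to `googlebot_hits` and calling `most_common(20)` on the result gives a quick view of where the crawler spends its time, including any 404s or redirects it keeps hitting.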

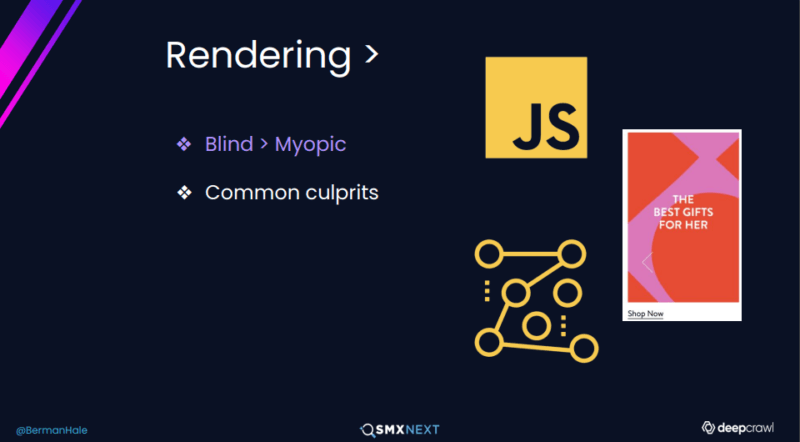

Ensure search engines are rendering pages correctly

Website crawling is just one piece of the technical SEO puzzle: crawlers also need to render those pages. If your content isn’t delivered in a form those bots can process, they may not see it at all or may render it incorrectly. To avoid this, marketers need to present their content in formats that both searchers and crawlers can view.

“Anything that requires a click from the user or needs the user’s engagement is going to be difficult if not impossible for bots to get to,” Hale said. “Go to your most popular pages, drop some important content in quotes in Google and then see if they have it.”

“Another thing that you can do to see rendering is to use the mobile-friendly tool in Google to give you a nice snapshot,” she added.
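A complementary local check, sketched below in Python, is to test whether an important phrase appears in the visible text of the HTML your server sends before any JavaScript runs. This does not replace the Google checks Hale describes, and it is only a heuristic: a phrase missing from the static HTML may still be rendered later by scripts. The names `TextExtractor` and `phrase_in_static_html` are hypothetical.

```python
from html.parser import HTMLParser


class TextExtractor(HTMLParser):
    """Collects text outside of script, style, noscript and template elements."""

    SKIP = {"script", "style", "noscript", "template"}

    def __init__(self):
        super().__init__()
        self.parts = []
        self._skip_depth = 0  # greater than zero while inside a skipped element

    def handle_starttag(self, tag, attrs):
        if tag in self.SKIP:
            self._skip_depth += 1

    def handle_endtag(self, tag):
        if tag in self.SKIP and self._skip_depth:
            self._skip_depth -= 1

    def handle_data(self, data):
        if not self._skip_depth:
            self.parts.append(data)


def phrase_in_static_html(html, phrase):
    """True if phrase appears in the page's static text (case-insensitive)."""
    parser = TextExtractor()
    parser.feed(html)
    # Normalize whitespace so line breaks in the markup do not hide a match.
    # Note: text split across inline tags mid-word will not match.
    text = " ".join(" ".join(parser.parts).split()).lower()
    return " ".join(phrase.split()).lower() in text
```

If a phrase from a key page returns `False` here, that content probably depends on scripts or user interaction, so it is worth confirming in Google's own tools that it was rendered and indexed.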

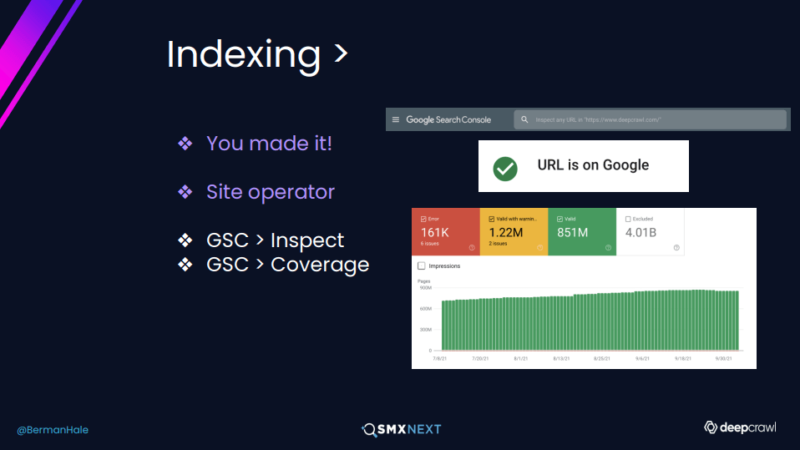

Review indexing for your site’s pages

Once you know Google and other search engines are crawling and rendering your site correctly, spend some time reviewing your indexed pages. This can give you one of the clearest pictures of your site’s health, highlighting which pages were chosen, which were excluded and why the search engine made those decisions.

“You can check everything that Google sees, which is insightful,” said Hale. “While half of our battle is getting the good stuff into the index, the other half can be getting the bad stuff out.”

Hale recommends reviewing the Coverage report in GSC, “which gives you some broad generalizations. You can see a few example URLs for that inspector at the top — it gives you lots of data on each page, including if it’s indexed.”
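When a page turns up as excluded, one common cause worth ruling out is a `noindex` directive, set either in a robots meta tag or in an `X-Robots-Tag` response header. The Python sketch below checks a page's HTML and headers for both. It is an illustrative spot check under simple assumptions, not a substitute for the Coverage report, and the names `RobotsMetaParser` and `is_noindexed` are hypothetical.

```python
from html.parser import HTMLParser


class RobotsMetaParser(HTMLParser):
    """Collects directives from <meta name="robots"> and <meta name="googlebot">."""

    def __init__(self):
        super().__init__()
        self.directives = set()

    def handle_starttag(self, tag, attrs):
        if tag != "meta":
            return
        attr = {key: (value or "") for key, value in attrs}
        if attr.get("name", "").lower() in {"robots", "googlebot"}:
            for directive in attr.get("content", "").split(","):
                if directive.strip():
                    self.directives.add(directive.strip().lower())


def is_noindexed(html, headers=None):
    """True if the meta tags or the X-Robots-Tag header ask for no indexing."""
    parser = RobotsMetaParser()
    parser.feed(html)
    header_value = ""
    for name, value in (headers or {}).items():
        if name.lower() == "x-robots-tag":
            header_value = value.lower()
    # "none" is shorthand for "noindex, nofollow" in a robots meta tag.
    # The header check is a simple substring match and ignores user-agent prefixes.
    return (
        "noindex" in parser.directives
        or "none" in parser.directives
        or "noindex" in header_value
    )
```

Running this across the URLs you expect to rank helps with the first half of Hale's battle, catching good pages that are blocked by accident. Running it across thin or duplicate pages helps confirm the "bad stuff" is actually marked to stay out.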